View our Webinar on Demand: Apply Anycast Best Practices for Resilient & Performant Global Applications

It’s been an incredibly energizing time at NVIDIA GTC, where the innovations in AI and the partner connections reminded me of running ISPs back in the ’90s. That same pioneering spirit brought to mind NetActuate co-founder Mark Price’s “The Serial Port,” which showcases early networking creativity. Today we’re moving at an even more intense pace, with AI front and center.

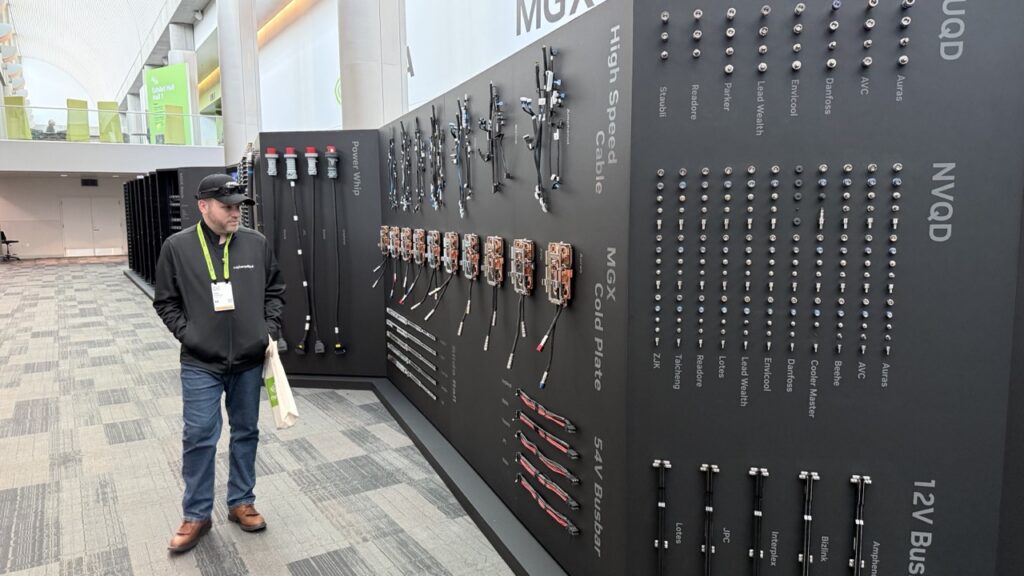

Coherently is a new startup backed by NetActuate. While we’re new in name, our core team brings 50 years of combined expertise in multi-tenant cloud infrastructure. We’re working with NVIDIA Cloud Partners (NCPs), cloud service providers (CSPs), and other partners to build the stack that enables “AI factories,” and we’re hiring aggressively to keep up with demand.

If you’re deploying NVIDIA GPUs, whether on-prem, in the cloud, or air-gapped, and are looking for an AWS-style “cloud in a box” solution, reach out.

NetActuate itself has decades of experience managing some of the world’s most demanding distributed workloads, often handling billions of transactions per second. In my recent Forbes article, “How Increasing Global Demand And Competition Are Driving The Edge Revolution,” I explained how skyrocketing demand, fierce hardware competition, and strategic global partnerships are fueling a new era for AI and edge computing.

As more businesses and governments realize that remaining competitive requires significant investments in advanced infrastructure—ranging from nuclear power initiatives to nuanced international collaborations—the winners will be those who seize innovation head-on. This mindset is exactly what NetActuate and Coherently bring to the table: proven expertise and a willingness to engage globally so that AI can flourish, even under the most intensive performance and regulatory requirements.

Throughout the week, we joined multiple investor gatherings, including one hosted by Fusion Fund (Lu Zhang) and BDK Capital at the impressive Miro Towers (built by Bayview’s Yiwen Li), as well as events with other VCs and angels. Altogether, Coherently held 90+ meetings.

One highlight was a private Developer/Partner telco event with Jensen Huang, who addressed a select group of startups and partners. He reminded us: “I can tell you for a fact that the people that I know who are successful in this journey, people with speed and agility, they get shit done fast. Instead of taking three years to build a data center or two years to build a data center and stand things up, they stand things up in months, not years, months.”

AI is reshaping telecom’s core operations, pushing data centers to act as “factories” for compute-heavy workloads. Monetizing hardware resources and advanced AI solutions is now a strategic focal point for many operators. GPU-driven architectures promise new revenue streams, not just cost savings. Collaboration and dynamic frameworks emerged as central themes.

Ronnie Vasishta (NVIDIA): Illustrating how telcos can pivot into AI production hubs, he stressed that GPU-accelerated workloads and software-driven frameworks are crucial. Ronnie argued that agile, scalable methods can radically transform data center economics.

Real-world AI deployments—especially generative models—are driving measurable ROI. Speakers emphasized secure, cloud-native strategies to scale without jeopardizing data security. Ultimately, adopting AI as an enterprise-wide function rather than a pilot project accelerates innovation and profitability.

Moving away from manual, “best effort” operation, AI-driven RAN (radio access network) uses machine learning for real-time orchestration. Scheduling, resource distribution, and performance optimization become adaptive and intent-based. This shift fosters both speed and reliability, particularly in advanced 5G deployments.

Serving 270M people under stringent data regulations, Indonesia showed that large-scale AI can remain sovereign. Working with NVIDIA, local telcos, and healthcare innovators, the country tackled language and policy hurdles effectively. The initiative reveals significant potential for AI in emerging markets. It is a truly remarkable, forward-thinking approach that will help Indonesia’s young population emerge as global innovators.

AI factories, when aligned with local infrastructure and regulations, can unlock lucrative revenue streams. Edge-scale solutions and greener data centers also address national goals, as seen in Norway, Canada, and elsewhere. These examples suggest “homegrown” AI can thrive alongside global collaborations.

Generative AI avatars can handle user interactions 24/7, enhancing customer experiences, but brand trust demands accuracy. This session showcased how digital humans automate front-line support. Missteps can quickly undermine credibility, so rigorous testing is essential.

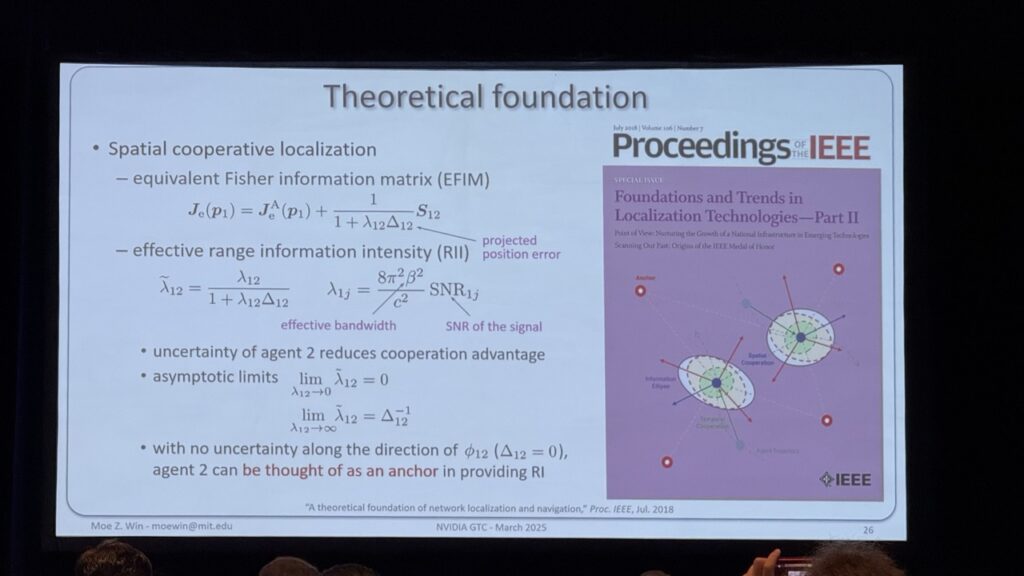

Software-based 6G will rely on AI for waveforms, HPC synergy, and precise positioning. Intelligence, not just more bandwidth, defines next-level performance. Speakers agreed that building AI in from the ground up sets 6G networks on a faster, more flexible upgrade path.

Offloading heavy tasks to DPUs and orchestrating them with Kubernetes can handle large AI workloads while maintaining security and service chaining. AI-native networking is the logical next evolution in advanced telecom, enabling automation and agility at scale.

Telcos are no longer just carriers—they’re shifting into full-fledged AI powerhouses, defining the future of connectivity. As Jensen Huang reflected, decades of computing and data centers were built around a legacy “serial” model, where old architectures turned data centers into cost centers rather than revenue generators. In the AI era, that paradigm is flipped: data centers become “factories of the future,” enabling telcos, enterprises, and cloud providers to monetize advanced compute resources and move beyond traditional network delivery.

Coherently, spun out from NetActuate’s decades of large-scale computing experience, is here to help operators and enterprises build these next-generation AI factories quickly and effectively.

As Jensen said, computers were once locked into an outdated design, but we’re entering a new realm where agility and forward-thinking infrastructure drive real value.

If you’re looking to push AI boundaries in your own operations—transitioning from old serial models to modern, AI-native platforms—let’s team up. The opportunity is massive, and it’s accelerating by the day.

— The Coherently Team

Reach out to learn how our global platform can power your next deployment. Fast, secure, and built for scale.
